00 Intro to class

Stat 406

Geoff Pleiss, Trevor Campbell

Last modified – 04 September 2024


About us

Geoff Pleiss

Assistant Professor, Department of Statistics

Trevor Campbell

Associate Professor, Department of Statistics

Wait, there’s two of you?

Geoff & Trevor are co-teaching this course!

Think of the two of us as interchangeable people.

(It’s not that hard. We’re very similar.)

- We will both be present at (almost) all lectures

- We will roughly alternate who is giving the lecture

- We are both in charge of course material / course policies / grades / etc.

Philosophy of the class

We and the TAs are here to help you learn. Ask questions.

We encourage engagement and curiosity

We favour steady work through the term (vs. sleeping until finals)

The assessments attempt to reflect this ethos.

More philosophy

When the term ends, we want

- You to be better at coding.

- You to have an understanding of the variety of methods available to do prediction and data analysis.

- You to be able to articulate their strengths and weaknesses.

- You to be able to choose between different methods using your intuition and the data.

We do not want

- You to be under undue stress

- You to feel the need to cheat, plagiarize, or drop the course

- You to feel treated unfairly.

Health/COVID Policies (TL;DR)

- Attend class whenever you are healthy

- We encourage you to wear a mask if you want

- Do NOT come to class if you are possibly sick

- The marking scheme is flexible enough to allow some missed classes

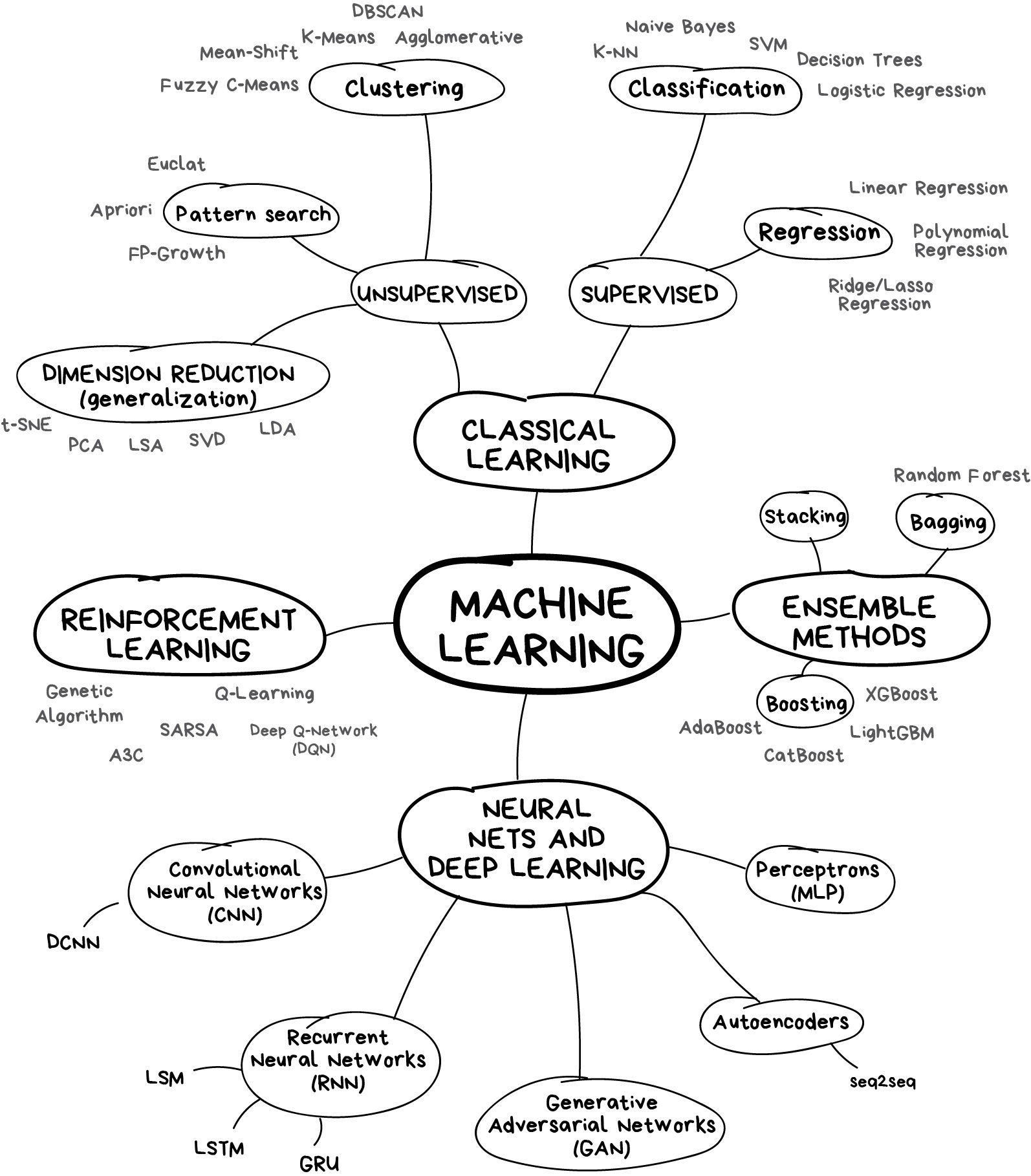

Course map

What this course is not

- 5 easy steps to use scikit-learn

- Everything there is to know about machine learning

- The hypiest new machine learning models

What this course is

- The fundamentals for developing strong intuitions/understanding about ML

Predictive models

1. Preprocessing

centering / scaling / factors-to-dummies / basis expansion / missing values / dimension reduction / discretization / transformations

2. Model fitting

Which box do you use?

3. Prediction

Repeat all the preprocessing on new data. But be careful.

4. Postprocessing, interpretation, and evaluation

We will focus mostly on 1 and 4.

Source: https://vas3k.com/blog/machine_learning/
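One common reading of "be careful" in step 3 is that preprocessing constants should be learned from the training data and then reused, unchanged, on new data. That reading is an assumption here, not something the slide spells out. A minimal sketch for centering and scaling, with hypothetical helper names `fit_scaler` and `apply_scaler` and made-up numbers:

```python
from statistics import mean, stdev


def fit_scaler(train):
    """Learn the centering and scaling constants from the training data only."""
    return mean(train), stdev(train)


def apply_scaler(values, center, scale):
    """Center and scale values using constants that were learned elsewhere."""
    return [(v - center) / scale for v in values]


# Made-up illustrative data (not from the course).
train = [2.0, 4.0, 6.0, 8.0]
new = [5.0, 10.0]

# Fit on the training data, then reuse the same constants for the new data.
center, scale = fit_scaler(train)
train_scaled = apply_scaler(train, center, scale)
new_scaled = apply_scaler(new, center, scale)
```

Recomputing the mean and standard deviation on `new` would put the new observations on a different scale from the one the model was fit on.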

6 modules

- Review (today and next week)

- Model accuracy and selection

- Regularization, smoothing, trees

- Classifiers

- Modern techniques (classification and regression)

- Unsupervised learning

Each module is approximately 2 weeks long

Each module is based on a collection of readings and lectures

Each module (except the review) has a homework assignment

Assessments

Effort-based

Total across three components: 65 points, any way you want

- Labs, up to 20 points (2 each)

- Assignments, up to 50 points (10 each)

- Clickers, up to 10 points

effort_grade = min(65, labs + assignments + clickers)
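As a minimal sketch of the effort-based rule, each component is capped at its stated maximum and the sum is capped at 65 points. The function and argument names are illustrative, not part of the course materials.

```python
def effort_grade(labs: float, assignments: float, clickers: float) -> float:
    """Effort-based component of the mark, capped at 65 points."""
    # Each component has its own ceiling: 20, 50, and 10 points.
    labs = min(labs, 20)
    assignments = min(assignments, 50)
    clickers = min(clickers, 10)
    # Up to 80 points are available, but only 65 count.
    return min(65, labs + assignments + clickers)


# Full lab and clicker points, with one assignment scored 0 out of 10.
example = effort_grade(labs=20, assignments=40, clickers=10)
```

In this example the components sum to 70, so the effort grade is still the full 65.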

Knowledge-based

Final Exam, 35 points

Labs / Assignments

The goal is to “Do the work”

Assignments

Not easy, especially the first 2, especially if you are unfamiliar with R / Rmarkdown / ggplot

You may revise to raise your score to 7/10, but only if you lost 3+ points for content (penalties can’t be redeemed). See the Syllabus.

Don’t leave these for the last minute

Labs

Labs should give you practice and a chance to ask the TAs questions.

They are due at 2300 on the day of your lab and are lightly graded.

You may do them at home, but then you must submit individually (in lab, you may share a submission).

Clickers

Questions are similar to the Final

0 points for skipping, 2 points for trying, 4 points for correct

- Average of 3 = 10 points (the max)

- Average of 2 = 5 points

- Average of 1 = 0 points

total = max(0, min(5 * points / N - 5, 10))
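The formula above can be sketched as a small function, assuming `points` is the sum of your per-question scores and `N` is the number of clicker questions asked. The names `clicker_total` and `n_questions` are illustrative.

```python
def clicker_total(points: float, n_questions: int) -> float:
    """Convert raw clicker points into the 0-10 clicker component."""
    # Average score per question (0 = skipped, 2 = tried, 4 = correct).
    average = points / n_questions
    # Linear map: an average of 1 gives 0, an average of 3 gives 10,
    # clipped to the range [0, 10].
    return max(0.0, min(5 * average - 5, 10.0))


# Over 10 questions: an average of 3, 2, and 1 points per question.
scores = [clicker_total(30, 10), clicker_total(20, 10), clicker_total(10, 10)]
```

Averages above 3 earn no extra credit, and averages below 1 still give 0 for this component.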

Be sure to sync your device in Canvas.

Don’t do this!

An average below 1 drops your final mark by 1 letter grade.

A- becomes B-, C+ becomes D.

Final Exam

Scheduled by the university.

It is hard

The median last year was 50% \(\Rightarrow\) A-

Philosophy:

If you put in the effort, you’re guaranteed a C+.

But to get an A+, you should really deeply understand the material.

No penalty for skipping the final.

If you’re cool with C+ and hate tests, then that’s fine.

Late policy

If you have not submitted your lab/assignment by the time grading starts, you will get a 0.

| When you submit | Likelihood that your submission gets a 0 |
|---|---|
| Before 11pm on due date (i.e. on time) | 0% |
| 11:01pm on due date | 0.01% |
| 9am after due date | 50% |
| 2 weeks after due date | 99.99999999% |


We will only make exceptions when you have grounds for academic concession. (See the UBC policy.)

Tip

Remember: you can still get a “perfect” effort grade even if you get a 0 on one assignment.

Time expectations per week:

Coming to class – 3 hours

Reading the book – 1 hour

Labs – 1 hour

Homework – 4 hours

Study / thinking / playing – 1 hour

Questions?

Textbooks

An Introduction to Statistical Learning

James, Witten, Hastie, Tibshirani, 2013, Springer, New York. (denoted [ISLR])

Available free online: http://statlearning.com/

The Elements of Statistical Learning

Hastie, Tibshirani, Friedman, 2009, Second Edition, Springer, New York. (denoted [ESL])

Also available free online: https://web.stanford.edu/~hastie/ElemStatLearn/

It’s worth your time to read.

If you need more practice, read the Worksheets.

Computer

We will use R and we assume some background knowledge.

We suggest you use the RStudio IDE.

See https://ubc-stat.github.io/stat-406/ for what you need to install for the whole term.

Links to useful supplementary resources are available on the website.

This course is not an intro to R / Python / MongoDB / SQL.

Other resources

- Canvas (minimal)

  - Quiz 0, grades, course time/location info, links to videos from class

- Course website

  - All the material (slides, extra worksheets): https://ubc-stat.github.io/stat-406

- Slack

  - Discussion board, questions

- GitHub

  - Homework / Lab submission

All lectures will be recorded and posted

We cannot guarantee that they will all work properly (sometimes we mess it up)

Some more words

Lectures are hard. It’s 8am, everyone’s tired.

Coding is hard. We hope you’ll get better at it.

We strongly urge you to get up at the same time every day. It’s really hard to sleep in until 10 on MWF and make class at 8 on T/Th.

We have to give you a grade, but we want that grade to reflect your learning and effort, not other junk.

If you need help, please ask.

Questions?

Some things to see on the website

- Read the syllabus (See also Lab 0)

- Links to slides, how to download / print, browse source code

- Install the R package, read documentation, check your LaTeX installation

- BE SURE to follow the Computer Setup instructions!

- Worksheets for extra help.

- Read the FAQ!

- View the Course GitHub (once you have access)

UBC Stat 406 - 2024